We’ve been iterating on GPT-4 to make it safer and more aligned from the beginning of training, with efforts including selection and filtering of the pretraining data, evaluations and expert engagement, model safety improvements, and monitoring and enforcement. GPT-4 poses similar risks to previous models, such as generating harmful advice, buggy code, or inaccurate information. However, the additional capabilities of GPT-4 lead to new risk surfaces. To understand the extent of these risks, we engaged over 50 experts from domains such as AI alignment risks, cybersecurity, biorisk, trust and safety, and international security to adversarially test the model.

GPT-4 can also be confidently wrong in its predictions, not taking care to double-check work when it’s likely to make a mistake. Interestingly, the base pre-trained model is highly calibrated (its predicted confidence in an answer generally matches the probability of being correct). However, through our current post-training process, the calibration is reduced.
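To make the notion of calibration concrete (predicted confidence generally matching the probability of being correct), here is a minimal sketch of one common way to quantify it, the expected calibration error. This is an illustration only, not the measurement procedure used for GPT-4; the function name `expected_calibration_error`, the equal-width binning, and the sample numbers are all assumptions introduced here.

```python
from typing import Sequence


def expected_calibration_error(
    confidences: Sequence[float],
    correct: Sequence[bool],
    n_bins: int = 10,
) -> float:
    """Weighted average gap between stated confidence and observed accuracy.

    Hypothetical helper for illustration: answers are grouped into equal-width
    confidence bins, and each bin contributes |mean confidence - accuracy|
    weighted by the fraction of answers that fall into it.
    """
    if len(confidences) != len(correct):
        raise ValueError("confidences and correct must have the same length")
    if len(confidences) == 0:
        raise ValueError("at least one prediction is required")

    conf_sum = [0.0] * n_bins  # sum of confidences per bin
    hits = [0] * n_bins        # number of correct answers per bin
    count = [0] * n_bins       # number of answers per bin

    for conf, is_correct in zip(confidences, correct):
        if not 0.0 <= conf <= 1.0:
            raise ValueError("confidence must lie in [0, 1]")
        # A confidence of exactly 1.0 goes into the last bin.
        idx = min(int(conf * n_bins), n_bins - 1)
        conf_sum[idx] += conf
        hits[idx] += int(is_correct)
        count[idx] += 1

    total = len(confidences)
    ece = 0.0
    for i in range(n_bins):
        if count[i] == 0:
            continue  # empty bins contribute nothing
        mean_conf = conf_sum[i] / count[i]
        accuracy = hits[i] / count[i]
        ece += (count[i] / total) * abs(mean_conf - accuracy)
    return ece


# Made-up data: a model that says "75% sure" and is right 3 times out of 4
# is well calibrated; one that says "95% sure" and is right 1 time out of 4
# is confidently wrong.
well_calibrated = expected_calibration_error([0.75] * 4, [True, True, True, False])
overconfident = expected_calibration_error([0.95] * 4, [True, False, False, False])
```

A value near zero means confidence tracks accuracy closely; a larger value means the stated confidence is a poor guide to correctness, which is the kind of degradation the post-training process is described as introducing.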

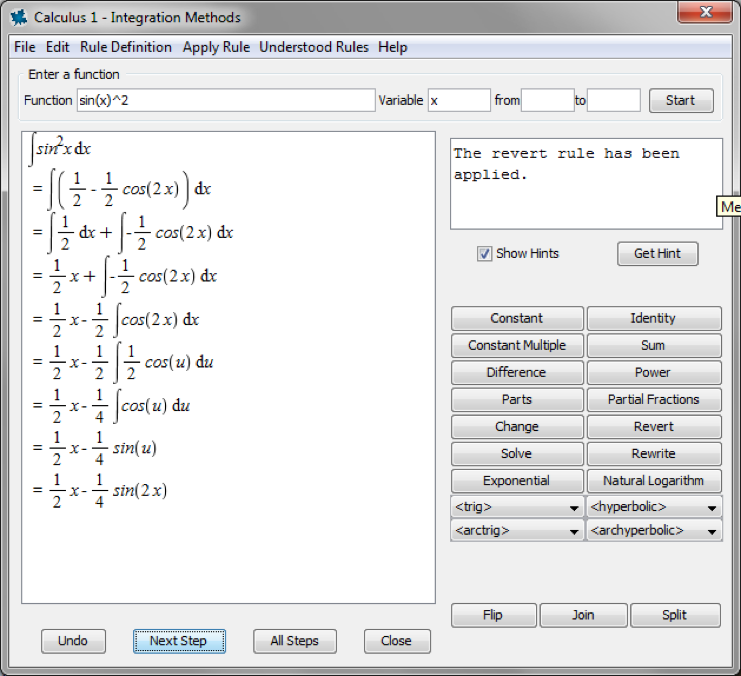


GPT-4 generally lacks knowledge of events that have occurred after the vast majority of its data cuts off (September 2021), and does not learn from its experience. It can sometimes make simple reasoning errors which do not seem to comport with competence across so many domains, or be overly gullible in accepting obviously false statements from a user. And sometimes it can fail at hard problems the same way humans do, such as introducing security vulnerabilities into code it produces.

Per our recent blog post, we aim to make the AI systems we build have reasonable default behaviors that reflect a wide swathe of users’ values, allow those systems to be customized within broad bounds, and get public input on what those bounds should be.

The model can have various biases in its outputs; we have made progress on these but there’s still more to do.

[Exam benchmarks listed: Medical Knowledge Self-Assessment Program; Graduate Record Examination (GRE) Writing; Graduate Record Examination (GRE) Quantitative]

GPT-4 passes a simulated bar exam with a score around the top 10% of test takers; in contrast, GPT-3.5’s score was around the bottom 10%. We’ve spent 6 months iteratively aligning GPT-4 using lessons from our adversarial testing program as well as ChatGPT, resulting in our best-ever results (though far from perfect) on factuality, steerability, and refusing to go outside of guardrails.

Over the past two years, we rebuilt our entire deep learning stack and, together with Azure, co-designed a supercomputer from the ground up for our workload. A year ago, we trained GPT-3.5 as a first “test run” of the system. We found and fixed some bugs and improved our theoretical foundations. As a result, our GPT-4 training run was (for us at least!) unprecedentedly stable, becoming our first large model whose training performance we were able to accurately predict ahead of time. As we continue to focus on reliable scaling, we aim to hone our methodology to help us predict and prepare for future capabilities increasingly far in advance, something we view as critical for safety.

We are releasing GPT-4’s text input capability via ChatGPT and the API (with a waitlist). To prepare the image input capability for wider availability, we’re collaborating closely with a single partner to start. We’re also open-sourcing OpenAI Evals, our framework for automated evaluation of AI model performance, to allow anyone to report shortcomings in our models to help guide further improvements.


We’ve created GPT-4, the latest milestone in OpenAI’s effort in scaling up deep learning. GPT-4 is a large multimodal model (accepting image and text inputs, emitting text outputs) that, while less capable than humans in many real-world scenarios, exhibits human-level performance on various professional and academic benchmarks.
